About a year ago, I started partitioning my Calculus tests into three sections: Concepts, Mechanics, and Problem Solving, worth 25, 25, and 50 points respectively. The Concepts items involve no calculations; instead students answer questions, interpret the meanings of results, and draw conclusions based only on graphs, tables, or verbal descriptions. The Mechanics items are just straight-up calculations with no context, like “take the derivative of” a given function. The Problem Solving items are a mix of conceptual and mechanical tasks and can be either instances of things the students have seen before (e.g. optimization or related rates problems) or some novel situation that is related to, but not identical to, the things they’ve done on homework and so on.

I did this to stress to students that the main goal of taking a calculus class is to learn how to solve problems effectively, and that conceptual mastery and mechanical mastery, while different from and to some extent independent of each other, both flow into mastery of problem-solving like tributaries to a river. It also helps me identify specific areas of improvement; if the class’ Mechanics average is high but the Concepts average is low, it tells me we need to work more on Concepts.

I just gave the third of four tests to my two sections of Calculus, and for the first time I started paying attention to how the scores on the three parts relate to one another. It looked like something interesting was going on, so in addition to my usual boxplot analysis of the individual parts I made three scatter plots, pairing off Mechanics vs. Concepts, Problem Solving vs. Concepts, and Mechanics vs. Problem Solving, and looked for trends.

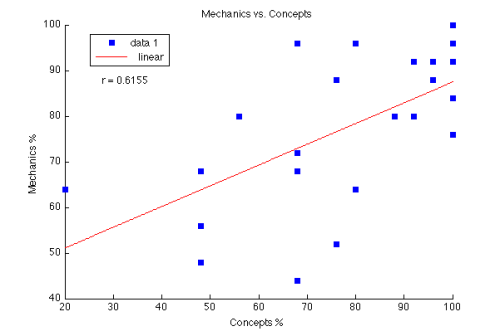

Here’s the plot for Mechanics vs. Concepts:

That r-value of 0.6155 is statistically significant at the 0.01 level. Likewise, here’s Problem Solving vs. Concepts:

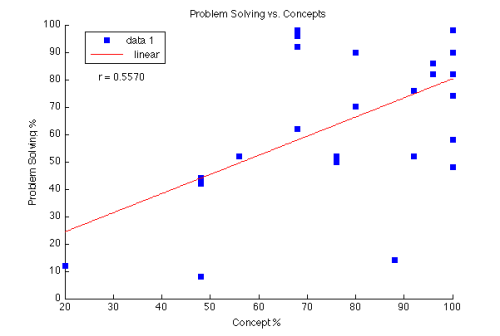

The r-value here of 0.5570 is obviously less than the first one, but it’s still statistically significant at the 0.01 level.

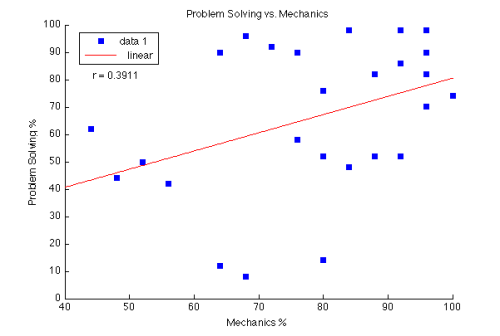

But check out the Problem Solving vs. Mechanics plot:

There’s a slight upward trend, but it looks disarrayed; and in fact the r = 0.3911 is significant only at the 0.05 level.
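For anyone who wants to check the significance levels quoted for the three plots, here is a minimal sketch. It assumes the n = 26 mentioned in the comments applies to all three scatter plots, and it compares the usual t statistic for a Pearson correlation against two-tailed critical values for 24 degrees of freedom taken from a standard t table. The function and variable names are illustrative only.

```python
import math

N_STUDENTS = 26  # assumption: the n = 26 from the comments applies to every plot

# Two-tailed critical t values for df = 24, from a standard t table.
T_CRIT = {0.05: 2.064, 0.01: 2.797}


def t_statistic(r, n):
    """t statistic for testing whether a Pearson r differs from zero."""
    return r * math.sqrt((n - 2) / (1 - r * r))


def strictest_level(r, n=N_STUDENTS):
    """Smallest tabulated alpha at which r is significant, or None if neither."""
    t = abs(t_statistic(r, n))
    passed = [alpha for alpha, crit in T_CRIT.items() if t > crit]
    return min(passed) if passed else None


# The r-values reported in the post.
reported = {
    "Mechanics vs. Concepts": 0.6155,
    "Problem Solving vs. Concepts": 0.5570,
    "Problem Solving vs. Mechanics": 0.3911,
}

for label, r in reported.items():
    t = t_statistic(r, N_STUDENTS)
    print(f"{label}: r = {r}, t = {t:.2f}, significant at {strictest_level(r)}")
```

Under that assumption the third correlation clears the 0.05 threshold only narrowly, which fits how scattered the plot looks.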

What all this suggests is that there is a stronger relationship between conceptual knowledge and mechanics, and between conceptual knowledge and problem solving skill, than there is between mechanical mastery and problem solving skill. In other words, while there appears to be some positive relationship between the ability simply to calculate and the ability to solve problems that involve calculation (are we clear on the difference between those two things?), the relationship between the ability to answer calculus questions involving no calculation and the ability to solve problems that do involve calculation is stronger — and so is the relationship between no-calculation problems and the ability to calculate, which seems really counterintuitive.

If this relationship holds in general — and I think that it does, and I’m not the only one — then clearly the environment most likely to teach calculus students how to be effective problem solvers is not the classroom primarily focused on computation. A healthy, interacting mixture of conceptual and mechanical work — with a primary emphasis on conceptual understanding — would seem to be what we need instead. The fact that this kind of environment stands in stark contrast to the typical calculus experience (both in the way we run our classes and the pedagogy implied in the books we choose) is something well worth considering.

Just to let you know, the link in the last paragraph is flawed.

Fixed. Thanks!

There’s a growing trend in professional development training classes (for post-college working adults) to teach “critical thinking.” It’s an acknowledgement that there’s an army of technical experts in our workforce today who can operate well in the weeds without ever looking up and beyond at the horizon. They can calculate but not, perhaps, in context.

Some of these “critical thinking” training offerings are as short as one-day workshops, intended to make critical thinkers out of technicians. It’s absurd, of course, to think that’s possible. Your observation and data seem to demonstrate that mechanical proficiency and problem-solving, which I’m equating with critical thinking, aren’t that often found packaged together in a person, which would explain the rush to teach technical professionals to think critically. I thought it was futile before, but this bit of data makes me think so even more.

I would say that mechanical skill might involve critical thinking, but critical thinking certainly does not consist in mechanics. The Conceptual problems I have are much more aligned with critical thinking and they appear to be at the heart of everything.

Also problematic is the lack of an operational definition of “critical thinking” in the first place.

Those “r” values seem low, too low to me. Consider looking at the relationship between concepts plus mechanics combined, and problem-solving. Does it have a higher “r” value?

If you average the percentages for Concepts and Mechanics into one percentage score, then correlate that with the percentages for problem-solving, the r comes out to be 0.5399. Not stunningly high, but still statistically significant at the 0.01 level for a sample this size (n = 26).
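The calculation described above can be sketched as follows. The score lists here are made-up placeholders, not the actual class data, and `pearson_r` and `composite` are names chosen just for this illustration.

```python
import math


def pearson_r(xs, ys):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    syy = sum((y - mean_y) ** 2 for y in ys)
    return sxy / math.sqrt(sxx * syy)


def composite(concepts, mechanics):
    """Average each student's Concepts and Mechanics percentages."""
    return [(c + m) / 2 for c, m in zip(concepts, mechanics)]


# Hypothetical percentages for five students (NOT the real scores).
concepts = [60, 72, 48, 88, 76]
mechanics = [80, 64, 56, 92, 70]
problem_solving = [55, 70, 40, 85, 62]

r = pearson_r(composite(concepts, mechanics), problem_solving)
print(f"r between composite and problem solving: {r:.4f}")
```

Averaging the two percentages weights Concepts and Mechanics equally, which matches the 25/25 point split on the test.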

I wouldn’t expect much more than a 0.6 on this at any rate. The cognitive jump from simple mechanical or simple conceptual problems to problem-solving is big enough that students could be good at the former but not so good at the latter. Interestingly, the converse is also true: students can score well on problem-solving without being particularly strong in either the basic mechanical or basic conceptual tasks. I think that’s primarily because the problem-solving section consisted of one optimization problem and one related-rates problem, both similar to many problems they’d worked before, so a practice effect might be in place.

Would you do the same in Calc II? Can you give some examples of conceptual questions at that level?

I don’t teach Calculus II at my college, but my colleague who does handle Calculus II has shown interest in structuring assessments in this way. I’ll ask him about what he’s done.

There is another correlation I would like to see. Your conclusion seems to be that you need both conceptual and mechanical to do problem solving, which makes sense. Could you correlate the sum of the grades on those sections with the score on the problem solving section, or some other equivalent, to demonstrate this?

There seems to be a group of about 7 who are doing quite poorly – remove them and it looks like the correlations tumble – just clouds of reasonably good points.

20/64/12

48/68/9

88/80/14 (what’s that about? ESL?)

68/44/62

48/48/42

76/53/50

48/56/51
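The effect described in this comment (a correlation driven largely by a low-scoring cluster) can be illustrated with a small sketch. The score pairs and the cutoff below are invented for the illustration and are not the class data; the real check would be to rerun the correlations on the actual scores with the low group removed.

```python
import math


def pearson_r(xs, ys):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    syy = sum((y - mean_y) ** 2 for y in ys)
    return sxy / math.sqrt(sxx * syy)


def r_for(pairs):
    """Correlation between the first and second entries of each pair."""
    xs, ys = zip(*pairs)
    return pearson_r(xs, ys)


# Hypothetical (Concepts %, Problem Solving %) pairs: four students who do
# well with no pattern among them, plus two who do poorly on both parts.
scores = [(80, 70), (80, 90), (90, 70), (90, 90), (20, 10), (30, 20)]

CUTOFF = 50  # hypothetical threshold for "doing quite poorly"
trimmed = [pair for pair in scores if pair[1] >= CUTOFF]

r_all = r_for(scores)
r_trimmed = r_for(trimmed)
print(f"all students: r = {r_all:.3f}")
print(f"low group removed: r = {r_trimmed:.3f}")
```

In this toy data the correlation is strong with everyone included and vanishes once the two low scorers are dropped, which is the pattern the comment is suggesting might be present.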

Sounds like some good reasoning to use PBL 🙂

I may have to try this method of testing for high school kids… I like it!